Racism is no glitch, Benjamin tells science writers

Ruha Benjamin presenting the eighth Patrusky Lecture online on Oct. 21, 2020.

In a presentation to hundreds of science journalists and communicators Oct. 21, a prominent Princeton social scientist argued that technology, often seen as the solution to many of the world’s problems, instead reinforces and itself creates harmful social inequalities.

Delivering the Patrusky Lecture at the ScienceWriters2020 virtual conference, Ruha Benjamin, sociologist and associate professor of African American studies at Princeton University, focused on the intersection of race with the culture and tools of science and technology.

“We’re socialized to think of [technology, science, and medicine] as neutral and objective, standing above society in its own little bubble,” said Benjamin. “In the last few years, that bubble has been burst.”

Benjamin was the eighth distinguished speaker selected to present the annual lecture honoring Ben Patrusky, a science writer and retired executive director of the Council for the Advancement of Science Writing (CASW). The lecture is part of CASW’s New Horizons in Science briefings. CASW organizes ScienceWriters conferences with the National Association of Science Writers.

Science has ‘created the alibi’ for racial violence

Scientists and new technologies such as social media are not immune to the insidious and pervasive workings of structural racism, she said, and their procedures and algorithms have, intentionally or not, served to strengthen racial stereotypes and support structural racism.

As a recent example, Benjamin cited the preliminary autopsy report on George Floyd, a Black man killed in Minneapolis last May when a police officer knelt on his neck for more than eight minutes. The report blamed his death on underlying conditions rather than on the direct and excessive use of deadly force. “It’s a miscarriage of justice that deepens the cut; not only can Black people be killed with impunity; a physician’s autopsy report can be twisted to replace the truth,” wrote a group of physicians at the time.

Using science to frame narratives in the way the initial autopsy report did, Benjamin said, legitimizes racial bias, racist perceptions, and violence. “Science has not simply been a bystander to racial violence, but has, in many ways, created the alibi,” she said.

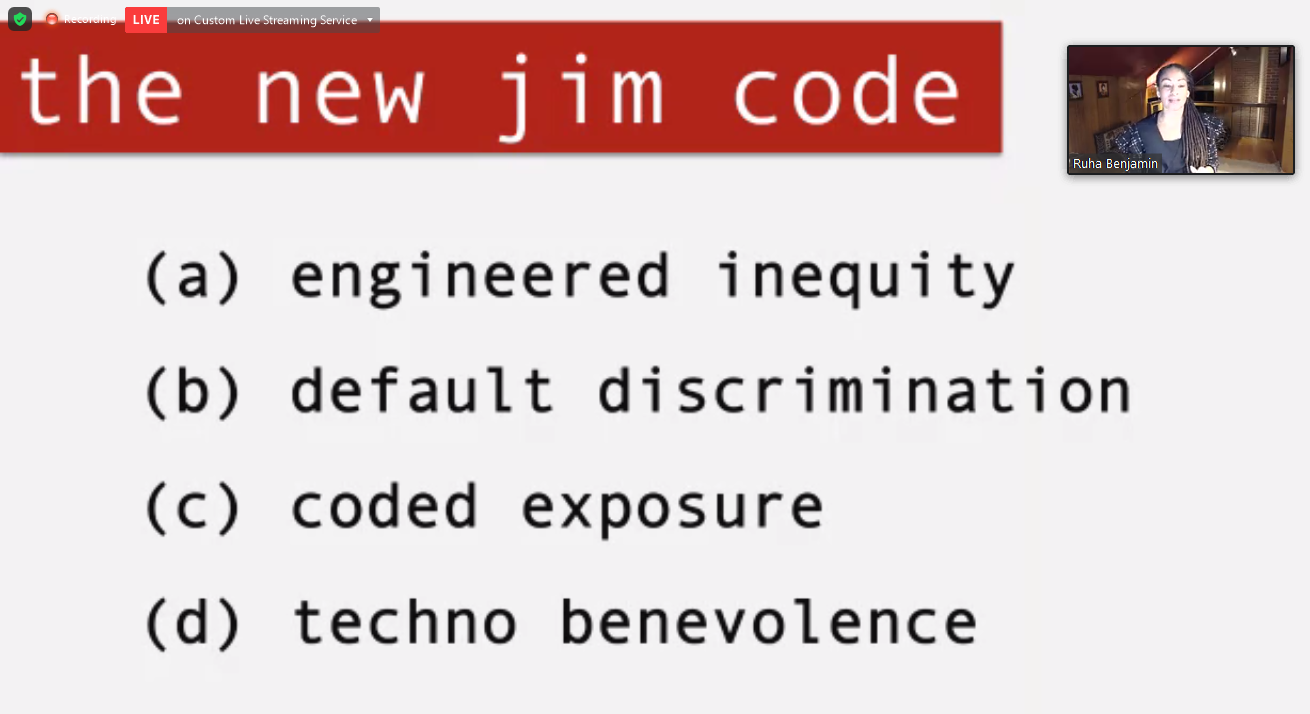

In recent years, Benjamin has studied how digital technology has become the main agent of racial bias, a theme she explores in her book Race after Technology: Abolitionist Tools for the New Jim Code. As one of several examples, she noted that a Google search for “unprofessional hairstyles” turns up a preponderance of images of Black women, while a similar search for “professional hairstyles” turns up more images of white women. The “New Jim Code,” a term Benjamin coined that plays on the Jim Crow laws that enforced racial segregation, refers to the way seemingly objective and neutral technology tools combine with built-in human biases to reinforce inequality.

In her lecture, Benjamin noted that two wildly different portrayals of new technologies in the media, Hollywood’s and Silicon Valley’s, share a troubling root. Hollywood has created a vision in which robots and other computerized tools wickedly take over the world. Silicon Valley envisions a world in which they save humanity. Both views, she said, are rooted in “techno-determinism,” which fails to spotlight and hold accountable the people behind the computer screen and the technology.

Benjamin called for further and wider examination of techno-deterministic framing to better understand not only how technology upholds racism, but also how racist ideology informs technology development.

Social scientists and others need to think about both outputs, in this case racist technologies and their impacts, and inputs: the ideologies, social values, and assumptions that those who develop new technology feed into it, she said. Racially biased outputs, she emphasized, are a mirror reflecting the inputs.

Compounding the problem, Benjamin said, is the veil of “objectivity” around science and its methods. For example, in a 2019 Science commentary she described a study of a so-called race-neutral algorithm that determined appropriate levels of health care by using predicted cost of care as a proxy for health needs. The study found that Black patients assigned the same level of health risk as white patients were in reality much sicker. Because the tool’s design did not take into account racial disparities in health care, under which less is typically spent on Black patients with the same needs, it was bound to lead to poorer outcomes for Black patients, she said.

Benjamin said technologies can be improved but are not the ultimate solution to structural racism in science or media. “If only there was a way,” she said, “to slay centuries of racist and sexist demons with a social justice bot.”

Technology: never bias-free

What is needed, she said, is greater awareness of the context in which science and technology operate. Without such awareness, science communicators risk perpetuating and upholding racial biases and disparities, and producing work that is not authentic to minority audiences. Similarly, without such context, scientists are “likely to produce knowledge that is not only not accurate, but harmful,” she added.

Through their work, both scientists and journalists can shine a light on racial disparities, Benjamin said, but only if they acknowledge and account for the large, murky gap between data and what people perceive. “We have to care as much about the stories that we’re telling as we do the statistics,” she said.

“Today we’re taught to think of racism as an aberration, a glitch, an accident,” she said. Instead, Benjamin countered, racism is systemic and embedded deep within our social systems and technologies. Technology, as a human enterprise, will therefore never be completely bias-free. Rather than chasing that ideal, she argued, tools, data, and technology should be used to hold those in power accountable.

“This is a way of shifting the lens, shifting the attention,” she said. “It’s not bias-free. It’s cognizant of the power dimensions and hierarchies in our society, and it’s strategically deciding: We are going to build tools that empower the marginalized.”

A video recording can be found on the Patrusky Lecture page on this website.