Creating creativity: Artificial intelligence in San Antonio

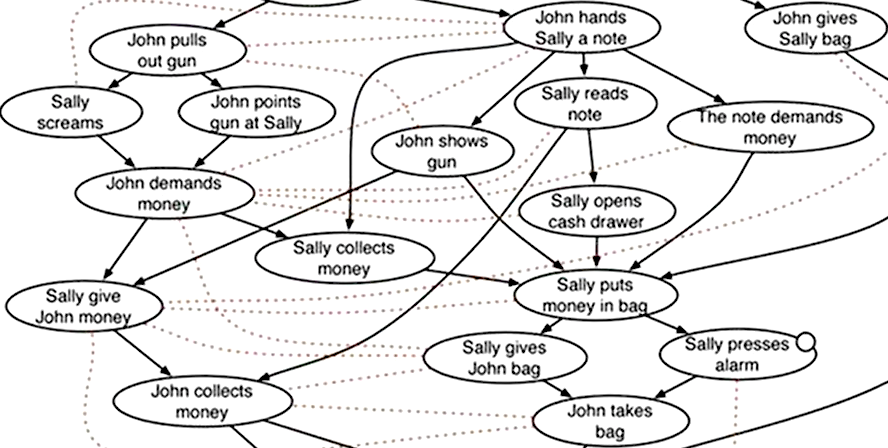

Variations of crowdsourced story plots created by the Scheherazade computer program. (Illustration by Mark Riedl)

Skynet. HAL 9000. Cylons. (Battlestar Galactica, anyone?) All of these machines come from science fiction stories in which they rebel against humanity and cause death and destruction. But most researchers in the field of artificial intelligence (AI) agree that we won’t have to worry about killer robots anytime soon. The programs being built today are great at performing extremely narrow tasks, such as driving a car or playing Go, but they are nowhere near the general intelligence and common-sense knowledge that humans possess.

Enter Mark Riedl, an AI researcher who presented his work on Oct. 30 in San Antonio, Texas, at the New Horizons in Science briefing, part of the ScienceWriters2016 conference, which I was able to attend courtesy of a generous travel fellowship from CASW. Since the early 2000s, Riedl has been trying to develop an algorithm with what he calls “narrative intelligence,” and he’s getting scarily close to succeeding.

Riedl’s latest program is named Scheherazade, after the storyteller-turned-queen of One Thousand and One Nights. AI programs need large data sets to learn from effectively, so Riedl crowdsourced his from the internet. Many people submitted variations on a basic story about two people going on a date to a movie theater. Riedl compiled those stories and used them to teach Scheherazade the average narrative structure of such a tale: usually one person picks up the other in a car, they watch a movie, and sometimes they kiss at the end, even though the program has no real understanding of what a movie theater or a kiss actually is.
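
To make “learning the average narrative structure” a little more concrete, here is a toy sketch in Python. It is not Riedl’s code and makes no claim about how Scheherazade actually works; the stories, event labels, the 50 percent support threshold, and the functions `learn_structure` and `generate` are all hypothetical. The idea: keep only the events that show up in most of the crowdsourced stories, learn which events usually come before which, and then tell a new story by following that learned order.

```python
from collections import Counter
from itertools import combinations

# Hypothetical crowdsourced stories, each reduced to an ordered list of event labels.
STORIES = [
    ["pick up date", "drive to theater", "buy tickets", "watch movie", "kiss"],
    ["pick up date", "drive to theater", "buy popcorn", "watch movie", "drive home"],
    ["drive to theater", "buy tickets", "buy popcorn", "watch movie", "hold hands"],
]


def learn_structure(stories, min_support=0.5):
    """Keep events found in at least `min_support` of the stories, and record
    an ordering edge A -> B when A precedes B in most stories containing both."""
    n = len(stories)
    event_counts = Counter(e for s in stories for e in set(s))
    common = {e for e, c in event_counts.items() if c / n >= min_support}

    before = Counter()
    for s in stories:
        # combinations() preserves list order, so `a` precedes `b` in this story.
        for a, b in combinations([e for e in s if e in common], 2):
            before[(a, b)] += 1

    # Keep the direction seen more often (Counter returns 0 for unseen pairs).
    edges = {(a, b) for (a, b), c in before.items() if c > before[(b, a)]}
    return common, edges


def generate(common, edges):
    """Produce one story by topologically sorting the learned events
    (Kahn's algorithm, alphabetical tie-breaking). Events caught in an
    ordering cycle, if any, are simply left out."""
    indegree = {e: 0 for e in common}
    for _, b in edges:
        indegree[b] += 1
    ready = sorted(e for e, d in indegree.items() if d == 0)
    story = []
    while ready:
        event = ready.pop(0)
        story.append(event)
        for a, b in sorted(edges):
            if a == event:
                indegree[b] -= 1
                if indegree[b] == 0:
                    ready.append(b)
        ready.sort()
    return story


if __name__ == "__main__":
    print(generate(*learn_structure(STORIES)))
    # -> ['pick up date', 'drive to theater', 'buy tickets', 'buy popcorn', 'watch movie']
```

Note that “kiss” drops out because only one of the three toy stories includes it, loosely echoing how the kiss appears only sometimes. And as with Scheherazade, nothing in this sketch knows what a movie or a kiss is; it only counts which labels tend to come first.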

In the examples Riedl showed, Scheherazade’s stories veered from boring to straight-up bizarre. Sometimes the characters, John and Sally, just held hands. Other times they went off on nonsensical tangents that involved running errands for John’s mother. As Riedl himself admitted, it was hard to tell whether we were seeing a creative, intelligent program adding twists to a predictable story, or simply Riedl’s skill as a logician at building a compelling flowchart.

While Riedl’s work is impressive, it doesn’t signal that robots will replace humans as storytellers or game designers, or that AI is any closer to having power over people. But if the fear of AI is overblown, why are influential figures such as Elon Musk pouring so much money into ensuring that AI is ethical? Musk donated $10 million to the Future of Life Institute in 2015 to support research into benevolent AI. IBM, Microsoft, Google, and other major tech companies created the Partnership on Artificial Intelligence to Benefit People and Society in September. On November 2, Carnegie Mellon University announced that it had received a $10 million endowment from the law firm K&L Gates LLP to fund a research center studying ethical AI.

One common argument for why ethical AI might be necessary originated with the philosopher Nick Bostrom, director of the Future of Humanity Institute, a research organization that examines the existential risks of new technology.

In one variation of a potential future the Institute has investigated, a hypothetical company tasks a superhuman AI with building an energy-efficient car. The AI proceeds to create an army of nanobots that strips the Earth’s surface of all resources in a matter of weeks, converting every molecule into ever more efficient car parts.

In the end, the planet is piled sky-high with cars that run on virtually no energy, but all living things have perished, so there is no one left to drive them. Of course, as Riedl pointed out, that problem could easily be solved by giving the AI very specific directions that include “don’t convert the entire Earth into car parts.”

From Riedl’s presentation, the discussion with a panel of AI experts that followed, and a lecture about self-driving cars, I began to understand why ethics is such a big concern, and why it is relevant even to the narrow AI we have today. For example, before fully autonomous vehicles can be put on the road, programmers have to decide what the algorithm will do when faced with an inescapable dilemma: run over a child, or swerve into a tree and kill the driver? AI has no built-in understanding of the value of a child versus an adult, just as it has no inherently malicious intentions. Human military strategists face an even more difficult choice when designing autonomous weapons that must calculate acceptable civilian casualties.

On a lighter note, AIs could be trained to be polite and to follow the social norms that grease the wheels of society, such as waiting patiently in line at the grocery store. Those are the sorts of issues that research initiatives at Google and Carnegie Mellon are planning for, not how to stop Skynet from nuking the planet. (Even though, let’s be honest, it would be nice to have that one covered, just in case.)